Here’s an exciting ‘AI can do that now’ moment: Meta’s latest AI, Cicero, can beat human players at classic negotiation and betrayal game Diplomacy. While playing online at webDiplomacy.net, it’s achieved “more than double the average score of human players”, ranking “in the top 10 percent of participants who played more than one game”. It can figure out who needs persuading to do what, then engage with those players using impressive and effective natural language.

I won’t do a ‘taking over the world’ joke. I won’t.

Diplomacy is a stripped-back board game where players compete for domination of Europe in a free-for-all version of WW1. Every turn you manoeuvre a small number of armies around the board, but more importantly, you make alliances. You tell Geoff you need to band together against Margaret’s Germany, agree to support his troops into Berlin, then secretly swap your support to Margaret because she’s promised to help you storm through Paris. Diplomacy is, as Meta’s research blog post puts it, “a game about people rather than pieces”.

Savvy manoeuvring helps, of course, and that’s a strategic domain where advanced AI uncontroversially outperforms humans, an advantage Meta naturally plays down. Nevertheless, Diplomacy is still a game where you need to convince people to cooperate with you, and Cicero can do just that.

More specifics can be found in Meta’s blog post and the team’s research paper, but you can jump straight to the most impressive bits in research scientist Mike Lewis’s Twitter thread.

Each game, it sends and receives hundreds of messages, which must be precisely grounded in the game state, dialogue history, and its plans. We developed methods for filtering erroneous messages, letting the agent to pass for human in 40 games. Guess which player is AI here… 4/5 pic.twitter.com/8IMuepL7yf

— Mike Lewis (@ml_perception) November 22, 2022

Meta’s blog post does get into the nitty-gritty of what makes Cicero tick, which is pretty interesting. Rather than improving solely through supervised learning, where an AI trains on “labeled data such as a database of human players’ actions in past games”, Cicero also plans: it predicts what every player will do, then refines those predictions while trying not to stray too far from them:

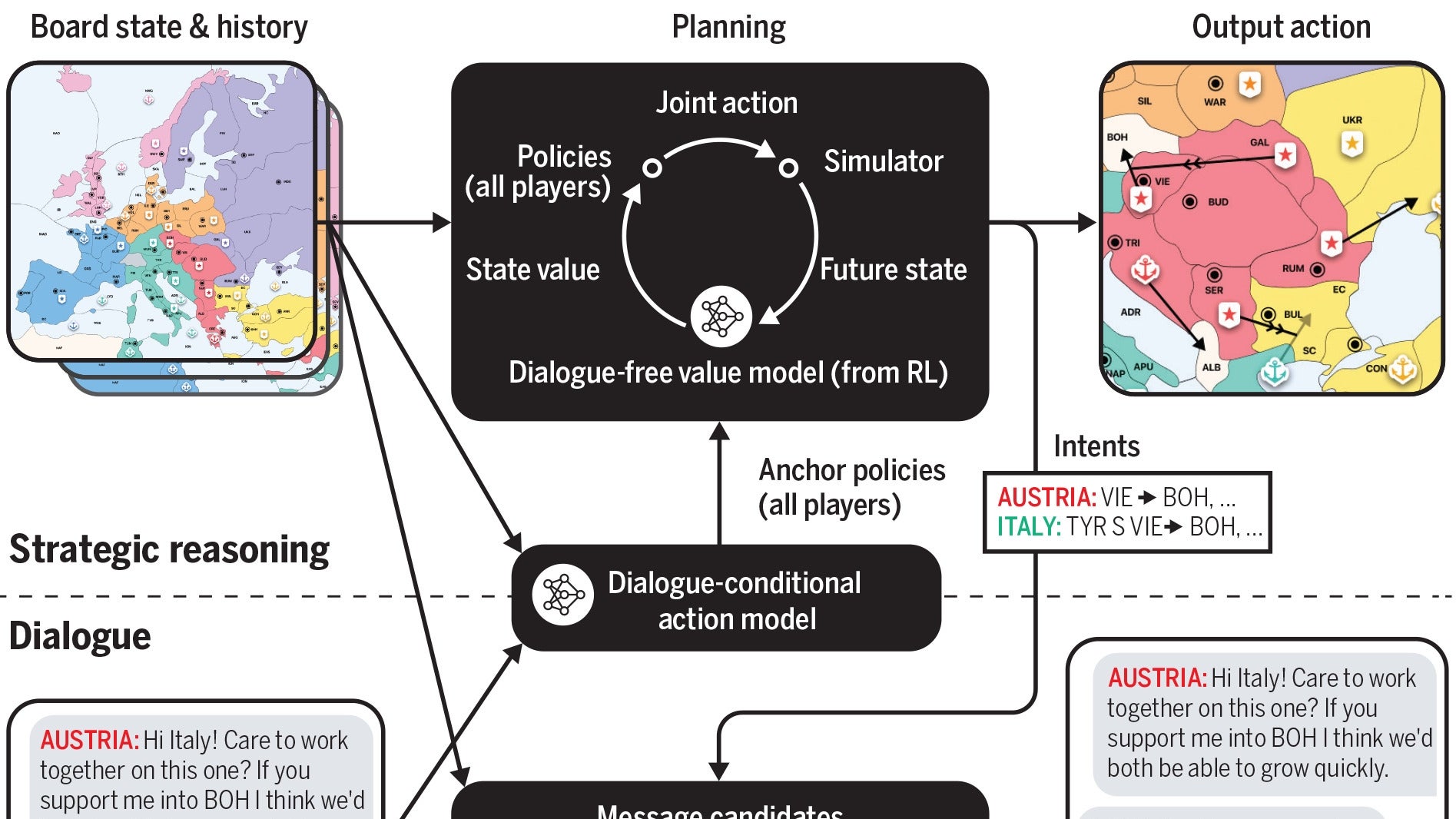

“Cicero runs an iterative planning algorithm that balances dialogue consistency with rationality. The agent first predicts everyone’s policy for the current turn based on the dialogue it has shared with other players, and also predicts what other players think the agent’s policy will be. It then runs a planning algorithm we developed called piKL, which iteratively improves these predictions by trying to choose new policies that have higher expected value given the other players’ predicted policies, while also trying to keep the new predictions close to the original policy predictions.”
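For the curious, here’s a heavily simplified sketch of the idea in that quote: chase higher expected value while staying close to an “anchor” prediction. This is not Meta’s code. The two-player matrix-game setting, the function names `kl_regularized_response` and `pikl_style_iteration`, and the `lam` (λ) strength parameter are our own illustrative assumptions; the real piKL handles Diplomacy’s seven players and enormous action space and differs in its details. The sketch relies on a standard result: the policy that maximises expected value minus λ times its KL divergence from an anchor policy is proportional to anchor × exp(value / λ).

```python
import numpy as np

def kl_regularized_response(q_values, anchor, lam):
    """Policy maximising E[Q] - lam * KL(policy || anchor).

    Closed form: policy(a) is proportional to anchor(a) * exp(Q(a) / lam).
    """
    logits = np.log(anchor) + q_values / lam
    logits -= logits.max()  # subtract the max for numerical stability
    policy = np.exp(logits)
    return policy / policy.sum()

def pikl_style_iteration(payoff_a, payoff_b, anchor_a, anchor_b, lam=1.0, iters=200):
    """Hypothetical two-player loop loosely inspired by the piKL description.

    payoff_a[i, j] / payoff_b[i, j] are the payoffs to players A / B when
    A plays action i and B plays action j. anchor_* are the initial
    ("human-like") policy predictions the updates are pulled back towards.
    """
    pi_a, pi_b = anchor_a.copy(), anchor_b.copy()
    avg_a, avg_b = pi_a.copy(), pi_b.copy()
    for t in range(1, iters + 1):
        # Expected value of each action against the other player's average policy
        q_a = payoff_a @ avg_b
        q_b = payoff_b.T @ avg_a
        # Move towards higher value, but stay close to the anchor predictions
        pi_a = kl_regularized_response(q_a, anchor_a, lam)
        pi_b = kl_regularized_response(q_b, anchor_b, lam)
        # Running average over the anchor and every iterate so far
        avg_a += (pi_a - avg_a) / (t + 1)
        avg_b += (pi_b - avg_b) / (t + 1)
    return avg_a, avg_b
```

Turning `lam` up keeps the predicted policies pinned near the anchors; turning it down lets them drift towards pure best responses, which is the consistency-versus-rationality trade-off the quote describes.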

Another tweet from Lewis expands on that, saying Cicero is “designed to never intentionally backstab” but that “sometimes it changes its mind…”.

Meta suggests one future application for an AI like Cicero could be creating videogame NPCs that talk realistically while understanding your motives. Maybe we really will get to talk to the monsters.